
Designing for IoT device battery life

IoT engineers have several tactics they can use to extend the battery life of devices, such as energy harvesting, power save mode and efficient hardware design.

Ever since Kevin Ashton coined the term "internet of things," IoT has scaled rapidly to meet diverse needs. Medical devices, smart meters, connected cars, smart agriculture, smart infrastructure: name the application, and an IoT sensor can play a part. The applications are boundless. IoT has become the internet of everything.

The number of connected devices worldwide will reach approximately 50 billion by 2030, Statista estimated in a recent report. These devices or systems will talk to each other, capture and analyze data and send it to the cloud for storage or further processing. To take it a step further, there is an increased interest in AI processing at the edge, making a smart device even smarter.

The growing need for applications and data processing on smart devices has created a challenge for device designers: how to get more out of the battery. Extending the interval between battery replacements, or eliminating replacements altogether, cuts maintenance costs and gives an IoT device a competitive edge. As a result, the quest for lower power consumption runs through the entire device design cycle. Many battery-powered IoT devices must operate remotely, relying solely on small batteries.

Design engineers conserve energy by balancing active operation against deep sleep mode, but this approach has limits. Some wearables and implantable medical devices, such as pacemakers, must keep certain parts of the circuit active for continuous monitoring. Those parts stay on at all times, even while other functions are in deep sleep, and their constant draw limits battery life. The circuit design must compensate for this limitation to meet the application's battery life requirements. Designers face a similar conflict with IoT devices that require continuous wireless connectivity, because staying connected works against extended battery life. Wireless communication is often the largest consumer of power in active devices.
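To make the trade-off concrete, the sketch below computes a time-weighted average current for a device that alternates between a short active burst and deep sleep, plus an optional always-on monitoring block, and converts it into an idealized battery life. All currents, timings and the 1,000 mAh capacity are hypothetical values chosen for illustration, and the model deliberately ignores self-discharge, temperature and battery aging.

```python
"""Sketch: battery life of a duty-cycled device with an always-on block.

All currents, durations and the battery capacity below are hypothetical
example values, not measurements of any real device.
"""


def average_current_ma(active_ma, active_s, sleep_ma, period_s, always_on_ma=0.0):
    """Time-weighted average current over one wake/sleep period, in mA.

    The always-on block (e.g. a monitoring front end) draws current for the
    whole period, regardless of what the rest of the circuit is doing.
    """
    if not 0 <= active_s <= period_s:
        raise ValueError("active time must lie within the period")
    sleep_s = period_s - active_s
    duty_cycled = (active_ma * active_s + sleep_ma * sleep_s) / period_s
    return duty_cycled + always_on_ma


def battery_life_days(capacity_mah, avg_ma):
    """Idealized battery life, ignoring self-discharge, temperature and aging."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical device: wakes for 2 s every 10 minutes, draws 20 mA when
# active and 5 uA asleep, with a 10 uA monitoring block that never sleeps.
without_monitor = average_current_ma(20.0, 2.0, 0.005, 600.0)
with_monitor = average_current_ma(20.0, 2.0, 0.005, 600.0, always_on_ma=0.010)

for label, avg in [("duty-cycled only", without_monitor),
                   ("with always-on block", with_monitor)]:
    print(f"{label}: {avg * 1000:.1f} uA average, "
          f"~{battery_life_days(1000.0, avg):.0f} days on 1000 mAh")
```

With these made-up numbers, the always-on block adds only 10 µA, yet it shortens the idealized battery life by roughly 12%, because the rest of the circuit spends almost all of its time asleep.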

Fortunately, many techniques are available to make electronic circuitry more power efficient, while extending battery lifetime.

Energy harvesting

Energy harvesting is a method of collecting energy from the environment and converting it into electrical energy that can power electronic circuitry. Methods of harvesting energy from the external environment include thermoelectric conversion, solar energy conversion, wind energy conversion, radio frequency (RF) signals and vibrational excitation.

RF energy harvesting captures ambient electromagnetic energy with an antenna and converts it into usable direct current (DC) with a rectifier circuit. Ambient RF energy comes from numerous high-frequency technologies, including Wi-Fi, microwave ovens and radio broadcasting. Vibrational energy comes from the subtle vibration of floors, machinery or automobile chassis. Methods for capturing ambient energy vary widely, depending on the frequency and amplitude of the available energy. Today, several companies make energy-harvesting chips that can eliminate the need for battery replacements in low-power IoT devices.
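Whether a harvester can replace a battery comes down to an energy budget: over a day, the energy captured after conversion losses must at least cover what the device consumes. The sketch below assumes constant harvested and load power, which real RF or vibration sources rarely provide; the power figures and the single lumped efficiency factor are hypothetical placeholders.

```python
"""Sketch: can a harvester alone sustain a device? (energy-neutral check)

Harvested power, conversion efficiency and load figures are hypothetical
placeholders; real ambient RF or vibration levels vary widely by site.
"""


def daily_energy_j(power_w, hours):
    """Energy in joules delivered or consumed at a constant power for `hours`."""
    return power_w * hours * 3600.0


def energy_balance_j(harvest_w, harvest_hours, efficiency, load_avg_w):
    """Net energy per day: harvested (after conversion losses) minus consumed.

    `efficiency` lumps together rectifier, converter and storage losses.
    A non-negative result means the harvester can, on average, carry the
    load; a negative result means a battery must make up the shortfall.
    """
    harvested = daily_energy_j(harvest_w, harvest_hours) * efficiency
    consumed = daily_energy_j(load_avg_w, 24.0)
    return harvested - consumed


# Hypothetical: 100 uW available around the clock, 50% end-to-end
# efficiency, and a device averaging 30 uW.
balance = energy_balance_j(harvest_w=100e-6, harvest_hours=24.0,
                           efficiency=0.5, load_avg_w=30e-6)
print(f"net energy per day: {balance:.3f} J")  # prints: net energy per day: 1.728 J
```

A positive daily balance is necessary but not sufficient: the storage element (a capacitor or rechargeable cell) must also be large enough to carry the device through periods when little ambient energy is available.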

Wireless standards

Cellular and noncellular wireless connectivity standards have developed features and optimization techniques that help maximize IoT device battery life. LTE-M, for example, defines power save mode and extended discontinuous reception to lower power consumption, and 802.11 wireless LANs provide their own power-save mechanisms.

Power save mode lets an IoT device sleep for a negotiated period, waking only to transmit data or check in with the network before going back to sleep, all while remaining registered with the network. The device and the network negotiate the timing based on the application's requirements. Because the device is inactive, and unreachable, during power save mode, its power consumption drops, prolonging battery life.
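On LTE-M modules, the requested timing is commonly expressed as two timers: the periodic tracking area update timer (T3412 extended), which sets the interval between the device's periodic check-ins with the network, and the active timer (T3324), which sets how long the device stays reachable for paging after each connection before entering power save mode. The sketch below encodes requested values into the 8-bit strings defined in 3GPP TS 24.008 and passed by the standard AT+CPSMS command from 3GPP TS 27.007. It is a sketch under those assumptions, not a driver for any particular module; vendors may add their own commands, and the network can grant values other than those requested.

```python
"""Sketch: encode requested LTE power save mode timers as 8-bit strings.

Assumes the GPRS Timer 3 (periodic TAU, T3412 extended) and GPRS Timer 2
(active time, T3324) encodings from 3GPP TS 24.008, as used by AT+CPSMS
in 3GPP TS 27.007. The network may grant different values than requested.
"""

# (unit code in bits 8-6, seconds per step) for GPRS Timer 3 / T3412 extended,
# ordered from finest to coarsest step.
T3412_UNITS = [(0b011, 2), (0b100, 30), (0b101, 60), (0b000, 600),
               (0b001, 3600), (0b010, 36000), (0b110, 1152000)]

# (unit code in bits 8-6, seconds per step) for GPRS Timer 2 / T3324.
T3324_UNITS = [(0b000, 2), (0b001, 60), (0b010, 360)]


def encode_timer(seconds, units):
    """Return the 8-bit string for the finest unit that represents `seconds` exactly.

    Bits 8-6 carry the unit code; bits 5-1 carry a multiplier from 0 to 31.
    """
    for code, step in units:
        if seconds % step == 0 and seconds // step <= 31:
            return f"{code:03b}{seconds // step:05b}"
    raise ValueError(f"{seconds} s is not exactly representable")


# Request a 24-hour check-in interval and 60 s of active time.
tau = encode_timer(24 * 3600, T3412_UNITS)
active = encode_timer(60, T3324_UNITS)
print(f'AT+CPSMS=1,,,"{tau}","{active}"')  # prints: AT+CPSMS=1,,,"00111000","00011110"
```

Picking the finest unit that fits is only one convention; "00100001" (1 × 1 hour) and "00000110" (6 × 10 minutes) request the same 1-hour interval.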

Extended discontinuous reception (eDRX), another LTE feature, works independently of power save mode to provide additional power savings. It greatly extends the interval during which an IoT device is not listening to the network. Although eDRX doesn't reduce power consumption as much as power save mode, the device still listens for the network once per eDRX cycle, so it can be a good compromise between device reachability and power consumption.
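The compromise can be sketched with a simple model: the device pays a fixed charge each time it wakes to check for paging, so a longer cycle lowers the average current but lengthens the worst-case wait before a downlink message reaches it. The cycle lengths, sleep current and per-wake charge below are hypothetical example values; real figures depend on the network configuration and the module.

```python
"""Sketch: the eDRX trade-off between reachability and average current.

Cycle lengths, currents and per-wake charge are hypothetical example
values; real figures depend on the network configuration and the module.
"""


def edrx_average_current_ua(cycle_s, sleep_ua, wake_charge_uc):
    """Average current (uA) when the device wakes once per cycle to check for paging.

    wake_charge_uc is the charge in microcoulombs spent per wake-up and
    paging check; spreading it over a longer cycle lowers the average
    (uC per second equals uA).
    """
    return sleep_ua + wake_charge_uc / cycle_s


for cycle_s in (5.12, 20.48, 81.92, 327.68, 1310.72):
    avg = edrx_average_current_ua(cycle_s, sleep_ua=15.0, wake_charge_uc=2000.0)
    # The worst-case wait before a downlink message reaches the device is
    # roughly one full cycle, because it only listens once per cycle.
    print(f"cycle {cycle_s:8.2f} s: ~{avg:6.1f} uA average, "
          f"worst-case delay ~{cycle_s:.0f} s")
```

The average current flattens toward the sleep floor as the cycle grows, while the delay keeps growing linearly, which is why the cycle length is an application-level choice rather than "as long as possible."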

Device design

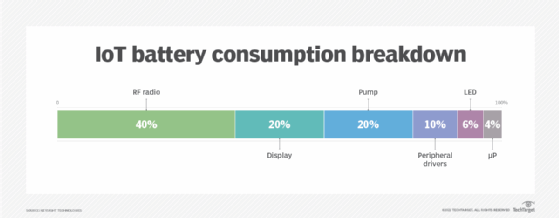

Design engineers strive to make electronic circuitry more power efficient. Certain hardware, software or firmware changes can cause the circuitry to draw more power, making it less power efficient. IoT device power consumption can also vary with climatic conditions. Designers often analyze how an IoT device consumes power in different scenarios by capturing its current consumption and breaking it down by hardware subsystem. From there, they can identify the design changes needed to optimize battery life, then repeat these steps to analyze and verify the effect of each change.
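The breakdown-and-compare loop can be sketched as two small functions: one ranks subsystems by their share of total charge, and the other shows how each subsystem's charge moved after a design change. The sketch assumes per-subsystem charge totals have already been measured, for example on each supply rail; the subsystem names and numbers are hypothetical.

```python
"""Sketch: break measured charge down by subsystem and compare two builds.

Assumes per-subsystem charge totals (millicoulombs per hour of operation)
were already measured, e.g. on each supply rail. Subsystem names and
numbers are hypothetical.
"""


def breakdown(charge_mc):
    """Return (subsystem, share of total charge) pairs, largest first."""
    total = sum(charge_mc.values())
    return sorted(((name, q / total) for name, q in charge_mc.items()),
                  key=lambda item: item[1], reverse=True)


def compare(before_mc, after_mc):
    """Per-subsystem change in charge after a design change (negative = saving)."""
    names = sorted(set(before_mc) | set(after_mc))
    return {name: after_mc.get(name, 0.0) - before_mc.get(name, 0.0)
            for name in names}


# Hypothetical firmware change that spends more MCU time to send fewer
# radio bytes.
baseline = {"radio": 540.0, "mcu": 120.0, "sensor": 60.0, "regulator": 30.0}
new_firmware = {"radio": 410.0, "mcu": 135.0, "sensor": 60.0, "regulator": 30.0}

for name, share in breakdown(baseline):
    print(f"{name:10s} {share:6.1%}")
print(compare(baseline, new_firmware))
```

Comparing per subsystem, rather than only the total, shows when a saving in one block is partly offset by a cost in another, as with the MCU here.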

This process is known as event-based power consumption analysis. Designers need to correlate the charge consumption profile with the RF or DC events of each subsystem. Optimizing a device design this way can be difficult and time-consuming: designers must simulate the environment, capture and compare current consumption data, make design changes and often repeat the whole process. Take, for example, a LoRa smart sensor for agriculture. To estimate battery life more accurately, the designer would capture the sensor's dynamic current consumption in a temperature chamber as it transitions between operating states, while simulating the temperature profile throughout the day. From the data log, the designer can calculate battery life from the charge consumption profile and decide which part of the circuitry, software or firmware needs improvement.
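A minimal version of the event-based calculation integrates a sampled current log over time, attributes the charge to the operating state active at each moment, and extrapolates battery life from the average current. The sketch assumes a log of (time, current, state) samples, such as one exported from a data logger during a chamber run; the state names, values and capacity are hypothetical.

```python
"""Sketch: event-based charge analysis of a sampled current log.

Assumes a log of (time_s, current_a, state) samples, e.g. exported from a
data logger while the sensor runs in a temperature chamber. States, values
and capacity are hypothetical.
"""
from collections import defaultdict


def charge_by_state(samples):
    """Integrate current over time (trapezoidal rule) and attribute charge to states.

    Each interval between consecutive samples is credited to the state of
    the sample that starts it. Returns coulombs per state.
    """
    totals = defaultdict(float)
    for (t0, i0, state), (t1, i1, _) in zip(samples, samples[1:]):
        totals[state] += (i0 + i1) / 2.0 * (t1 - t0)
    return dict(totals)


def battery_life_hours(samples, capacity_mah):
    """Extrapolate battery life assuming the logged window repeats indefinitely."""
    duration_s = samples[-1][0] - samples[0][0]
    avg_current_a = sum(charge_by_state(samples).values()) / duration_s
    capacity_c = capacity_mah * 3.6  # 1 mAh = 3.6 C
    return capacity_c / avg_current_a / 3600.0


# Hypothetical 10 s window: sleep at 10 uA, a 200 ms transmit burst at 20 mA.
log = [
    (0.0, 10e-6, "sleep"),
    (1.0, 10e-6, "tx"),
    (1.1, 20e-3, "tx"),
    (1.2, 20e-3, "sleep"),
    (1.3, 10e-6, "sleep"),
    (10.0, 10e-6, "sleep"),
]
print(charge_by_state(log))
print(f"~{battery_life_hours(log, 1000.0):.0f} h on 1000 mAh")
```

The extrapolation assumes capacity is independent of temperature; in practice, rerunning the capture across the day's temperature profile and derating capacity accordingly gives a more realistic estimate.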

Commercial test products can shorten test development and test time and make it easier to estimate an IoT device's battery life, so designers can spend more time on what's important: the device design.

Most people can relate to the anxiety caused by a low mobile phone battery. IoT devices are no different, whether it's a smartwatch, a medical device or a smart sensor for agriculture. Battery runtime is one of the most important criteria influencing the end user's buying decision. It can give your IoT device a competitive edge or destroy your brand's reputation. Fortunately, available technology and solutions can help product makers and device designers make informed decisions to manage power efficiently and optimize their IoT device's battery life.

About the author

Janet Ooi is a lead in Keysight IoT Industry and Solutions Marketing. She graduated with honors from Multimedia University in 2003 with a degree in Business Engineering Electronics, majoring in telecommunications. She worked at Intel as a process and equipment engineer for five years before joining Agilent Technologies, now known as Keysight Technologies, in 2008 as a product marketing engineer. Since then, Janet has also taken on product management, business development and market analyst roles.